Playing Games in the Dark: An approach for cross-modality transfer in reinforcement learning

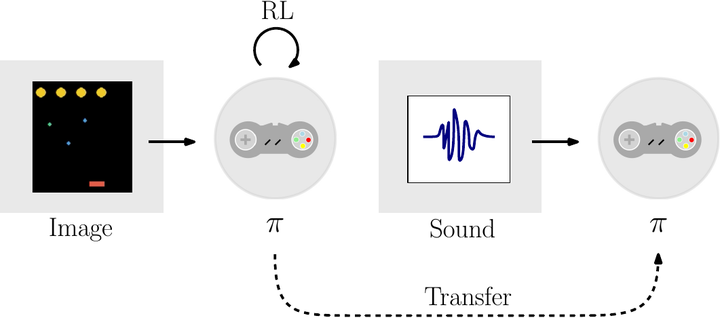

A policy trained on one input modality (video frames) is transferred to a different modality (sound).


Abstract

In this work we explore the use of latent representations obtained from multiple input sensory modalities (such as images or sounds) to allow an agent to learn and exploit policies over different subsets of those modalities. We propose a three-stage architecture that allows a reinforcement learning agent trained on a given sensory modality to execute its task on a different sensory modality: for example, learning a visual policy over image inputs and then executing that policy when only sound inputs are available. We show that the generalized policies achieve better out-of-the-box performance than several baselines. Moreover, we show that this holds across different OpenAI Gym and video game environments, even when using different multimodal generative models and reinforcement learning algorithms.
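To make the core idea concrete, here is a minimal toy sketch of cross-modality transfer through a shared latent space. It is not the paper's architecture or code. Everything in it is a hypothetical stand-in: the two "renderers" (`render_image`, `render_sound`) play the role of the environment's video and audio observations, the hand-written inverse encoders (`encode_image`, `encode_sound`) play the role of a learned multimodal generative model, and `latent_policy` is a fixed rule standing in for a policy trained with RL on image latents. The split into three steps is our own illustrative assumption, not the paper's definition of its stages. The point it shows is that a policy which only ever consumes the shared latent can be reused unchanged once the sound encoder replaces the image encoder.

```python
from typing import Callable, List

Vector = List[float]


# Hypothetical toy world: the true state is a 2-D vector, and each modality
# renders it differently (stand-ins for pixels and audio features).
def render_image(state: Vector) -> Vector:
    # "Image" features: a scaled and shifted copy of the state.
    return [2.0 * state[0] + 1.0, 2.0 * state[1] - 1.0]


def render_sound(state: Vector) -> Vector:
    # "Sound" features: the state components swapped and scaled.
    return [0.5 * state[1], 0.5 * state[0]]


# Step 1 (assumed): modality-specific encoders into one shared latent space.
# These are exact hand-written inverses of the renderers; in practice they
# would be learned by a multimodal generative model.
def encode_image(obs: Vector) -> Vector:
    return [(obs[0] - 1.0) / 2.0, (obs[1] + 1.0) / 2.0]


def encode_sound(obs: Vector) -> Vector:
    return [obs[1] / 0.5, obs[0] / 0.5]


# Step 2 (assumed): a policy that only ever sees latent vectors.
# A fixed rule stands in for a policy trained with RL on image latents.
def latent_policy(z: Vector) -> int:
    # Action 1 if the first latent component exceeds the second, else action 0.
    return 1 if z[0] > z[1] else 0


def act(encoder: Callable[[Vector], Vector], obs: Vector) -> int:
    # Encode the observation into the shared latent space, then act on it.
    return latent_policy(encoder(obs))


# Step 3 (assumed): execute the same policy when only sound is available.
if __name__ == "__main__":
    for state in [[0.3, -0.2], [-1.0, 0.5], [2.0, 1.5]]:
        image_action = act(encode_image, render_image(state))
        sound_action = act(encode_sound, render_sound(state))
        print(state, image_action, sound_action)
```

In this toy the two encoders agree exactly, so the transferred policy reproduces the visual policy's actions. With learned encoders the latents from different modalities only approximately coincide, which is where the quality of the multimodal model matters.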